Resolution, Precision and Accuracy

While reviewing and polishing my notes for another training course in Electrical/Electronic Measurements I thought I could share this important chapter. Because the terms Accuracy, Precision and Resolution are too often confused I spent more time on this chapter than on any of the others, trying to make it as clear as possible. Here it is, hoping you enjoy the ride:

Accuracy

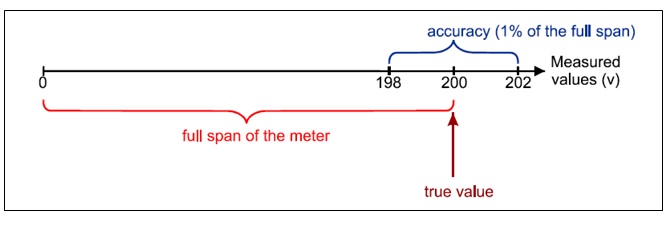

The accuracy of a measurement is simply the difference between the measured value and the true value. It is usually stated as plus or minus a percentage of the reading, or a percentage of the full scale for a selected range. This parameter is specified for every measuring device and covers the equipment errors only. Sometimes the specification also states the environmental conditions under which it holds, such as temperature and humidity.

Examples of accuracy specification:

+/- 1% of measured value

+/- 1% of full scale

Notice the difference between the two examples above. We will talk about this later.

This accuracy is not ambiguous. It just tells you how far you are from the goal! The figure below shows the accuracy of a measurement in relation to the full scale of the meter.

Precision

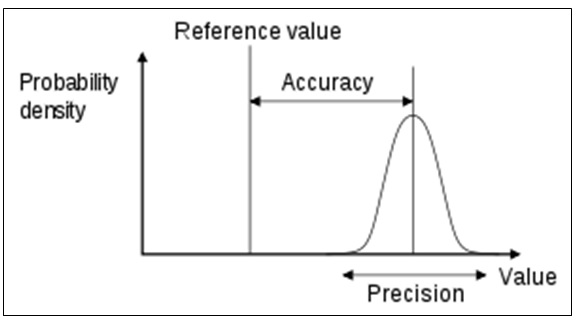

Precision is too often confused with Accuracy and many consider those two words as synonyms. However, precision is a separate property from accuracy, although an accurate instrument is often precise as well (technology obliges!). Precision describes how closely a set of readings agree with one another when they are taken repeatedly with the same measuring device in the same conditions. The deviation of the readings from their mean (average) value determines the precision of the instrument. The figure below illustrates this:

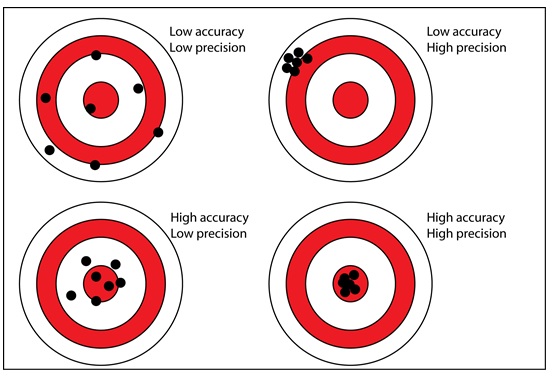
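To put numbers on the distinction, here is a minimal sketch in Python. The readings are invented for illustration (two hypothetical meters measuring a 5.000 V reference) and the function name `summarize` is my own. The offset of the mean from the true value stands in for accuracy, and the standard deviation of the readings stands in for precision.

```python
from statistics import mean, stdev

def summarize(readings, true_value):
    """Return the mean, its offset from the true value, and the spread."""
    avg = mean(readings)
    return {
        "mean": avg,
        "offset": avg - true_value,   # how far from the goal (accuracy)
        "spread": stdev(readings),    # how scattered the readings are (precision)
    }

TRUE_VALUE = 5.000  # hypothetical reference voltage, in volts

# Invented readings: meter A is tightly grouped but reads high,
# meter B is centred on the true value but scattered.
meter_a = [5.21, 5.20, 5.22, 5.21, 5.20]
meter_b = [4.90, 5.12, 5.03, 4.88, 5.07]

a = summarize(meter_a, TRUE_VALUE)
b = summarize(meter_b, TRUE_VALUE)
print("Meter A:", a)
print("Meter B:", b)
```

With these made-up numbers, meter A is precise but not accurate, while meter B is accurate on average but not precise.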

A measurement system can be accurate but not precise, precise but not accurate, neither, or both. The figure below better illustrates those differences:

Resolution

The resolution is the smallest change in input that the instrument can display for a particular range. Resolution is also often confused with accuracy but has nothing to do with it. Resolution is defined differently for analogue and digital meters, so we will discuss this separately for both types of instruments.

Now it’s time to see how these concepts apply to our measuring instruments:

Digital Meters

Digital multi-meters are now far more common but analogue multi-meters are still preferred in some cases; for example when monitoring a rapidly varying value. We will talk about analogue multi-meters later in this article.

Digital multi-meters (DMM, DVOM) display the measured value in numerals. Each numeral with possible values from 0 to 9 is called a digit.

The first digit on the display is called the Most Significant Digit (MSD).

The last digit on the display is called the Least Significant Digit (LSD).

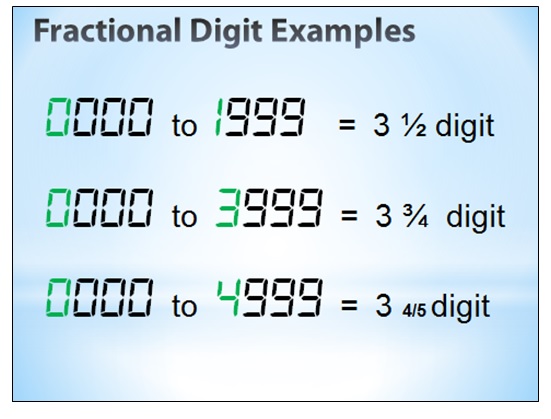

Digital meters are specified by the maximum number of digits they can display. It often consists of a number of full digits (each of them can display any number from 0 to 9) plus one fractional digit (1/2, 3/4, 4/5 etc…). This fractional digit is what often leads to confusion. It is a digit that can only display a few numbers (0 to 1 or 0 to 3 etc…). Usually the rule is as follows:

Fractional digit = a/b

a = maximum value the digit can attain

b = number of possible conditions

Examples:

½ = from 0 to 1 (max value = 1 with 2 possible conditions, 0 and 1)

3/4 = from 0 to 3 (max value = 3 with 4 possible conditions, 0, 1, 2 or 3)

4/5 = from 0 to 4 (max value = 4 with 5 possible conditions, 0, 1, 2, 3 or 4)

Unfortunately, some manufacturers do not follow this rule, leading to confusion… For that reason some manufacturers introduced another way of looking at the number of digits, the number of counts.

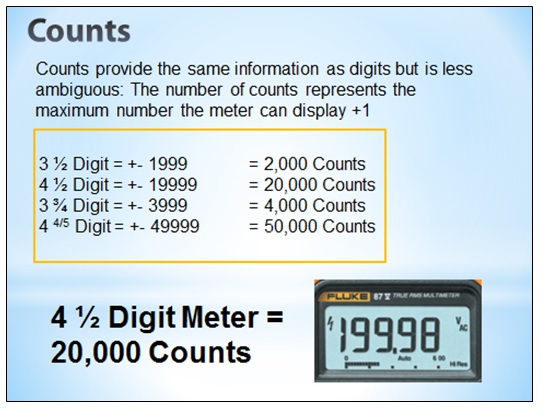

Counts

To avoid the possible confusion due to wrong interpretation of the fractional digit, manufacturers also provide the number of counts for a given instrument. This is exactly the same information as the number of digits but much easier to understand:

The number of counts represents the maximum number the meter can display +1 (ignoring the decimal point). For example a 3 ½ digit meter can display a number as big as 1999 so it will be rated 2,000 counts (1999 +1).

You may wonder why the +1… Many seem to believe that it is just to make the number look better (this is what I found from my intensive research using Google!). The reality is much simpler: because the display starts counting from zero, there are 2000 possible numbers (counts) on a 0000 to 1999 display!
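The link between the digit notation and the number of counts can be sketched in a few lines of Python. This assumes the manufacturer follows the a/b rule described earlier, where the fractional digit goes from 0 to a. The function name `counts_from_digits` is my own, not a standard one.

```python
def counts_from_digits(full_digits, fractional_max):
    """Number of counts for a meter with `full_digits` full digits and a
    fractional digit that goes from 0 to `fractional_max` (the 'a' in a/b).

    Assumes the a/b rule is followed; some manufacturers do not follow it.
    """
    # The fractional digit has (fractional_max + 1) possible values and
    # each full digit has 10, counting from zero.
    return (fractional_max + 1) * 10 ** full_digits

print(counts_from_digits(3, 1))  # 3 1/2 digits
print(counts_from_digits(3, 3))  # 3 3/4 digits
print(counts_from_digits(4, 4))  # 4 4/5 digits
```

For a 3 ½ digit meter this gives the 2,000 counts mentioned above (0000 to 1999).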

Digital Meter Resolution

People usually talk about a digital meter’s resolution as a number of digits. You will find advertisements that say “This meter has a 3 ½ digit resolution” or “Meter Resolution: 3 ¾ digit” etc… It is not totally wrong but it is not totally correct either. As defined earlier, resolution is the smallest change in input that the instrument can display for a particular range.

For example, a 20,000 counts meter (4 ½ digit) on its 100 V range will display up to 100.00V. The smallest value it can display on that range would be 0.01V. This is the resolution for this range. The same meter on the 10 V range will display 10.000 V. So its resolution for this range would be 0.001 V (the smallest value it can display on that range).

In reality the resolution is a function of the number of full digits. A 3 ¾ digit meter has the same resolution as a 3 ½ digit meter as shown in the examples below.

A 3½ digit DMM’s (2,000 counts) maximum display on the 2 V range is 1.999V, with 1 mV (0.001 V) resolution.

A 3¾ digit DMM (4,000 counts) on the 4-V range can measure up to 3.999V, also with 1 mV (0.001 V) resolution

Both the 3½ digit and 3¾ digit meters offer 1 mV resolution, even though the 3¾-digit meter has twice the range of counts!

Sounds complicated? Just think about the smallest value the LSD can display for each range and you have the resolution.
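The same reasoning can be written as a small Python sketch. It assumes the display always steps in powers of ten (1, 0.1, 0.01 …) and looks for the finest step at which the range still fits within the meter's counts. The function name `resolution` and this way of computing it are my own illustration, not a manufacturer's formula.

```python
from fractions import Fraction

def resolution(range_full_scale, counts):
    """Smallest LSD step (a power of ten) for a given range and count rating.

    Fractions are used instead of floats so the powers of ten stay exact.
    """
    full_scale = Fraction(str(range_full_scale))
    step = Fraction(1)
    # Coarsen the step if the range does not fit in the available counts.
    while full_scale / step > counts:
        step *= 10
    # Refine the step for as long as the range still fits.
    while full_scale / (step / 10) <= counts:
        step /= 10
    return step

print(float(resolution(2, 2000)))     # 3 1/2 digit meter, 2 V range
print(float(resolution(4, 4000)))     # 3 3/4 digit meter, 4 V range
print(float(resolution(100, 20000)))  # 4 1/2 digit meter, 100 V range
print(float(resolution(10, 20000)))   # 4 1/2 digit meter, 10 V range
```

The first two calls both give 1 mV, and the last two give 0.01 V and 0.001 V, matching the examples above.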

Digital Meter Accuracy

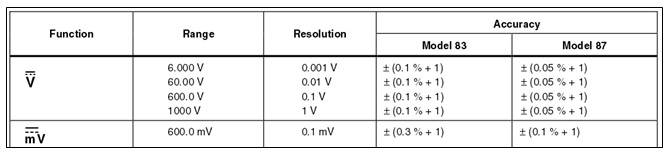

To start with, let’s have a look at the accuracy table of a Fluke Model 87 (4 ½ digits):

The table also includes the resolution for various ranges. Please note that it corresponds to the smallest value the LSD can display for each range as explained in the previous chapter.

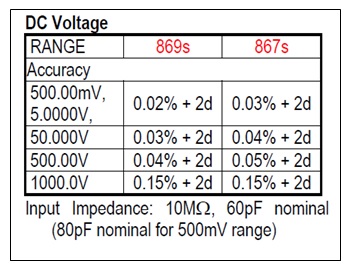

What about the accuracy? Here you can see that it is also specified for each range, although in this case they are all the same: +/- (0.05% + 1) – except for the mV range. But this is not necessarily the case for all the meters! Most meters will have different values of accuracy for each range. For example here is the DC Voltage Accuracy table of the Brymen BM867s (4 4/5 digits 50,000 counts):

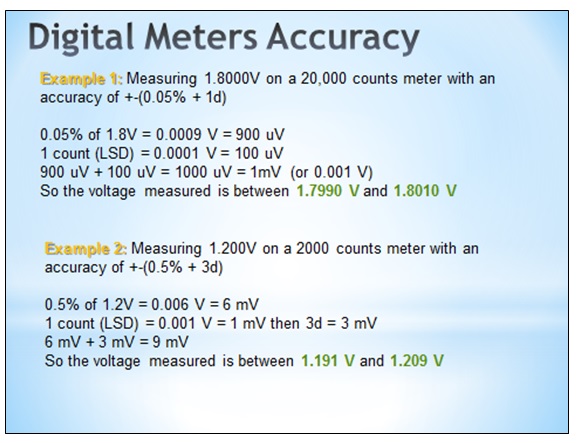

Now what is this “+1” or “+2d”, “+3d” etc… that you find in every digital meter accuracy specification? It means that we have to add the value of 1 or 2 or more “digits” to the accuracy of the reading. Confusing? Yes indeed it is! The word “digits” is very unfortunate in this case; it would be much better to use the term “LSD counts”. What you have to add to the percentage of the reading value is a number of counts of the Least Significant Digit for this range. Here below are two examples:
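As a rough sketch of that rule in Python: the uncertainty is the percentage of the reading plus the stated number of LSD counts, each count being worth one resolution step of the selected range. The ±(0.05% + 1) figure is the one quoted above; the 5.000 V reading and the 0.001 V resolution are values I assumed for illustration, and the function name is my own.

```python
def dmm_uncertainty(reading, percent_of_reading, lsd_counts, resolution):
    """Uncertainty of a digital meter reading specified as
    +/- (percent_of_reading % + lsd_counts), in the unit of the reading.

    `resolution` is the value of one LSD count on the selected range.
    """
    percent_part = abs(reading) * percent_of_reading / 100
    counts_part = lsd_counts * resolution
    return percent_part + counts_part

# Assumed example: 5.000 V displayed on a range with 0.001 V resolution,
# with an accuracy specification of +/- (0.05% + 1).
reading = 5.000
u = dmm_uncertainty(reading, 0.05, 1, 0.001)
print(f"{reading:.3f} V +/- {u:.4f} V")
print(f"true value between {reading - u:.4f} V and {reading + u:.4f} V")
```

Here the percentage contributes 0.0025 V and the single LSD count contributes 0.001 V, for a total of 0.0035 V.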

Analog Meter Resolution

Resolution is not often mentioned in analogue multi-meter specifications. At least I couldn’t find it in any of the specifications of the various models I surveyed. It is probably because we can find the resolution by looking at the scale divisions…

The resolution of an analog meter corresponds to the smallest scale subdivision for a particular range.

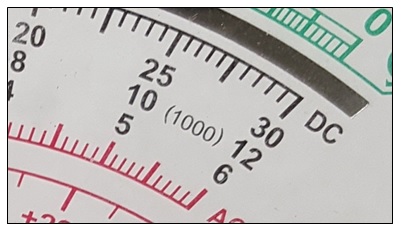

The resolution of the instrument above is 0.1 V for the 6VDC range, 0.2 V for the 12VDC range and 0.5V for the 30VDC range.

Analog Meter Accuracy

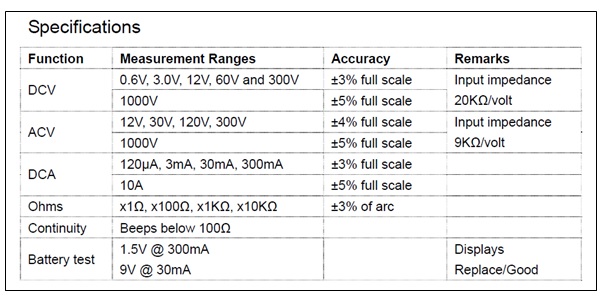

Analogue meters usually have their accuracy listed as a percentage of the full-scale reading. Let’s have a look at some real data. This is from a Tenma 72-8170:

As mentioned above, the accuracy is given as a % of full scale. Let’s see two examples:

On the 12 VDC scale the accuracy is +/- 3% of full scale (12V) = +/- 0.36 V

If you measure 10 V on this scale, the accuracy of your measurement will be:

+/- (0.36/10) * 100 = +/- 3.6 %

If you measure 5 V on the same scale, the accuracy will be:

+/- (0.36/5) * 100 = +/- 7.2 %

That’s why measurements using analogue meters are more accurate when the pointer is closer to the full scale, at least ¾ of the full scale.
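The calculation above generalises easily. The following Python sketch uses the same 12 V range and 3% of full scale figure and shows how the error, expressed as a percentage of the reading, grows as the pointer moves down the scale. The function names are my own.

```python
def full_scale_error(full_scale, percent_of_full_scale):
    """Absolute error for an accuracy given as a percentage of full scale."""
    return full_scale * percent_of_full_scale / 100

def error_percent_of_reading(reading, full_scale, percent_of_full_scale):
    """The same error expressed as a percentage of the actual reading."""
    return full_scale_error(full_scale, percent_of_full_scale) / reading * 100

FULL_SCALE = 12.0  # volts, the 12 VDC range
SPEC = 3.0         # +/- 3% of full scale

for volts in (12.0, 10.0, 5.0, 2.0):
    pct = error_percent_of_reading(volts, FULL_SCALE, SPEC)
    print(f"{volts:5.1f} V reading: +/- {pct:.1f} % of reading")
```

The absolute error stays at 0.36 V whatever the reading, so the lower the reading on a given range, the larger its share of the measured value.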

Sensitivity

Sensitivity is an absolute quantity, the smallest absolute amount of change that can be detected by a measurement. Please note that sensitivity should not be confused with resolution which is the smallest change in input that the instrument can display for a given range. Usually the sensitivity is a lower value than the smallest range resolution. Otherwise the LSD for this range would be meaningless… Digital instruments are very sensitive, sometimes too sensitive indeed. This is one of the reasons why we prefer using analogue instruments in some specific situations.

Sensitivity, however, is one of the most important characteristics of analogue multi-meters. This is because the meter must draw a certain amount of current from the circuit it is measuring in order for the pointer to deflect. The less current it draws, the more sensitive the instrument is. The meter appears as another resistor placed between the points being measured. This characteristic is specified as a number of Ohms per Volt (or Kilo-Ohms per Volt). The Ohm/Volt figure allows us to calculate the effective resistance for any given range, simply by multiplying this value by the range.

For example a multi-meter having a sensitivity of 20 KOhm/V will have a resistance of 200 KOhm on its 10 V range, or 2 MOhm on its 100V range etc…

The sensitivity for AC and DC is usually printed on the meter scale.

On the meter above we can see that the 50 uA and 0.1V DC ranges share a single scale. What happens if we divide 0.1V by 50 uA? The result is a resistance of 0.1/0.000050 = 2000 Ohm = 2 KOhm.

2 KOhm on a measuring range of 0.1 V = 20 KOhm/V… not surprisingly!

When making measurements the resistance of the meter should be at the very least ten times the resistance of the circuit being measured.
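These sensitivity calculations can be put together in a short Python sketch. It reuses the figures from this section (0.1 V and 50 uA, 20 KOhm/V, the 10 V and 100 V ranges) and the ten-times rule of thumb. The circuit resistances in the last two lines are assumed values for illustration, and the function names are my own.

```python
def ohms_per_volt(full_scale_voltage, full_scale_current):
    """Sensitivity in Ohm/V, derived from a range that shares its scale
    with the basic current range of the movement."""
    resistance = full_scale_voltage / full_scale_current
    return resistance / full_scale_voltage

def meter_resistance(sensitivity_ohms_per_volt, range_volts):
    """Effective resistance of the meter on a given voltage range."""
    return sensitivity_ohms_per_volt * range_volts

def loading_is_acceptable(meter_ohms, circuit_ohms):
    """Rule of thumb: the meter should be at least ten times the
    resistance of the circuit being measured."""
    return meter_ohms >= 10 * circuit_ohms

print(ohms_per_volt(0.1, 50e-6))        # about 20 KOhm/V
print(meter_resistance(20_000, 10))     # 10 V range
print(meter_resistance(20_000, 100))    # 100 V range

r_meter = meter_resistance(20_000, 10)
print(loading_is_acceptable(r_meter, 20_000))  # assumed 20 KOhm circuit
print(loading_is_acceptable(r_meter, 50_000))  # assumed 50 KOhm circuit
```

On the 10 V range the meter presents 200 KOhm, so by this rule it is suitable for circuits of up to 20 KOhm.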

Conclusions

Multi-meters, digital and analogue, are the most important instruments in a repair workshop and there is a lot to gain in understanding their specifications. Any serious electronic technician/engineer will have more than one multi-meter so he/she can compare the measured values in case of any doubt.

Now you should not be paranoid about it either. If you measure 4.85V at the output of a 5V power supply it doesn’t mean that the Power Supply is faulty! But it is nice to know if it is the voltage that is a bit low or your meter that needs calibration… On the other hand, if you measure a point labeled “3.1 V +/- 20 mV” in the service manual then you need to know if you can trust your multi-meter.
